Overleaf template gallery: Community articles

Papers, presentations, reports and more, written in LaTeX and published by our community.

Recent

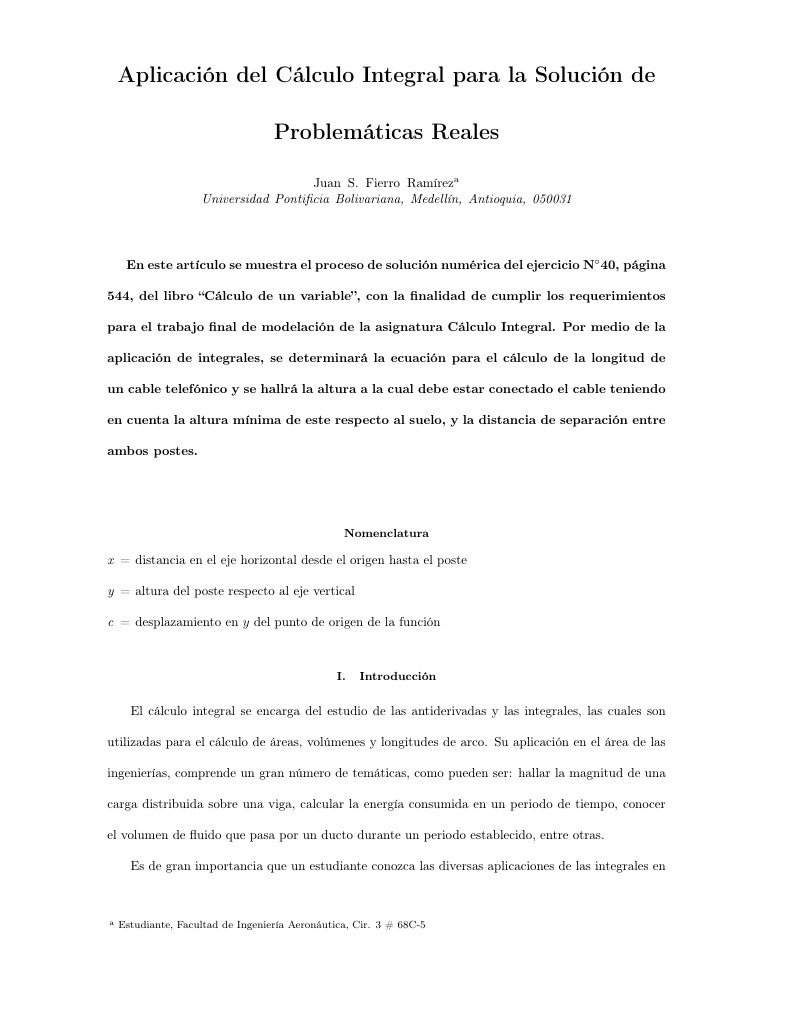

This article presents the numerical solution of exercise No. 40, page 544, of the book "Cálculo de una variable", in order to meet the requirements of the final modeling project for the Integral Calculus course. Using integrals, the equation for the length of a telephone cable is determined, and the height at which the cable must be attached is found, taking into account the cable's minimum height above the ground and the separation distance between the two poles.

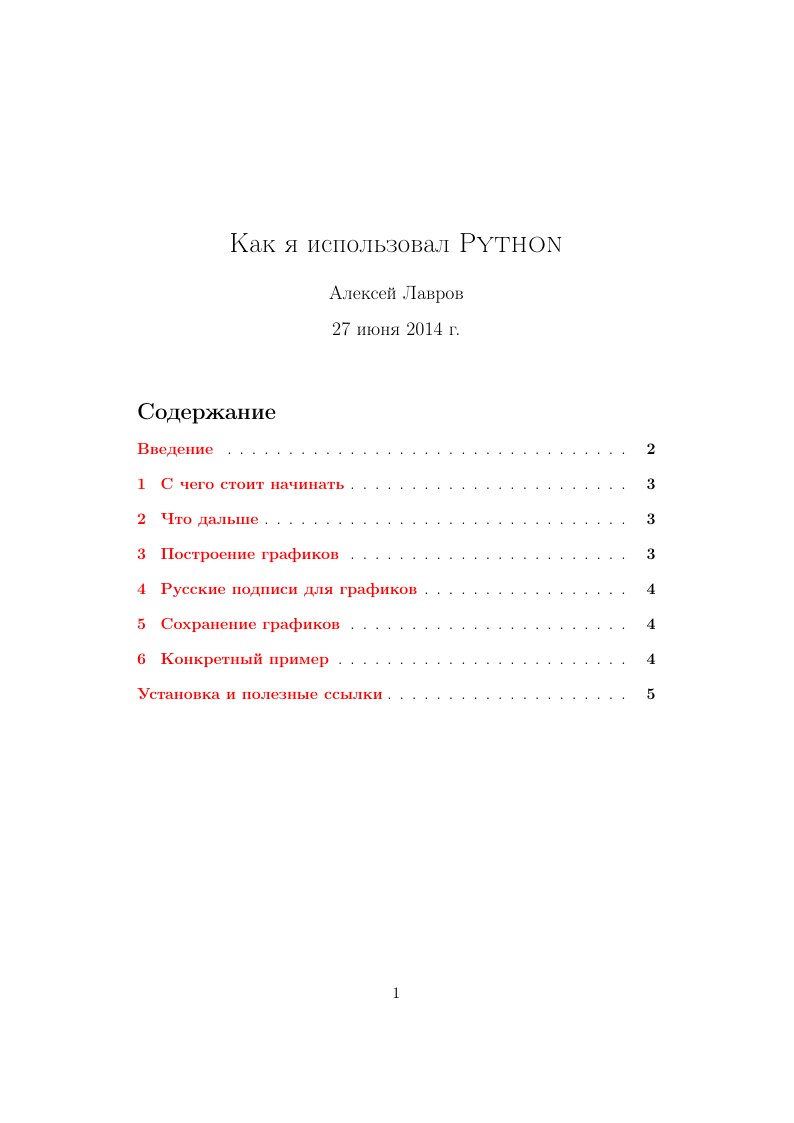

A quick guide for starting with Python

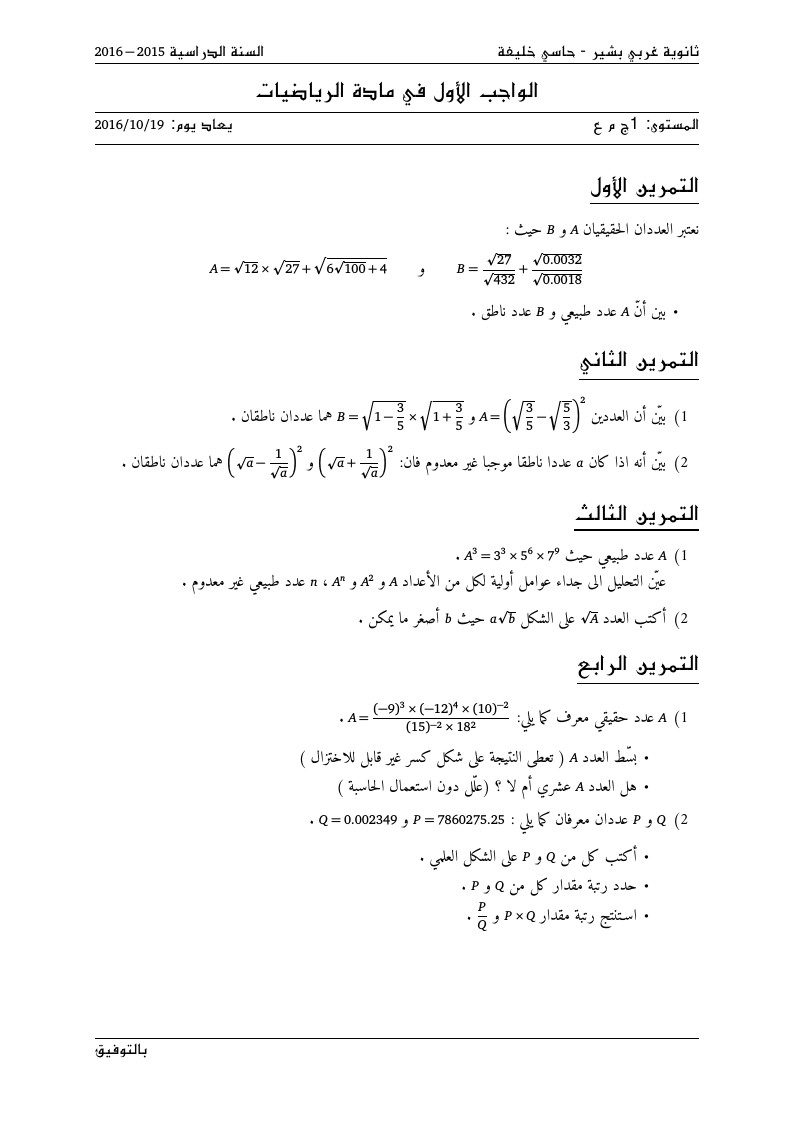

First-year high school homework assignment

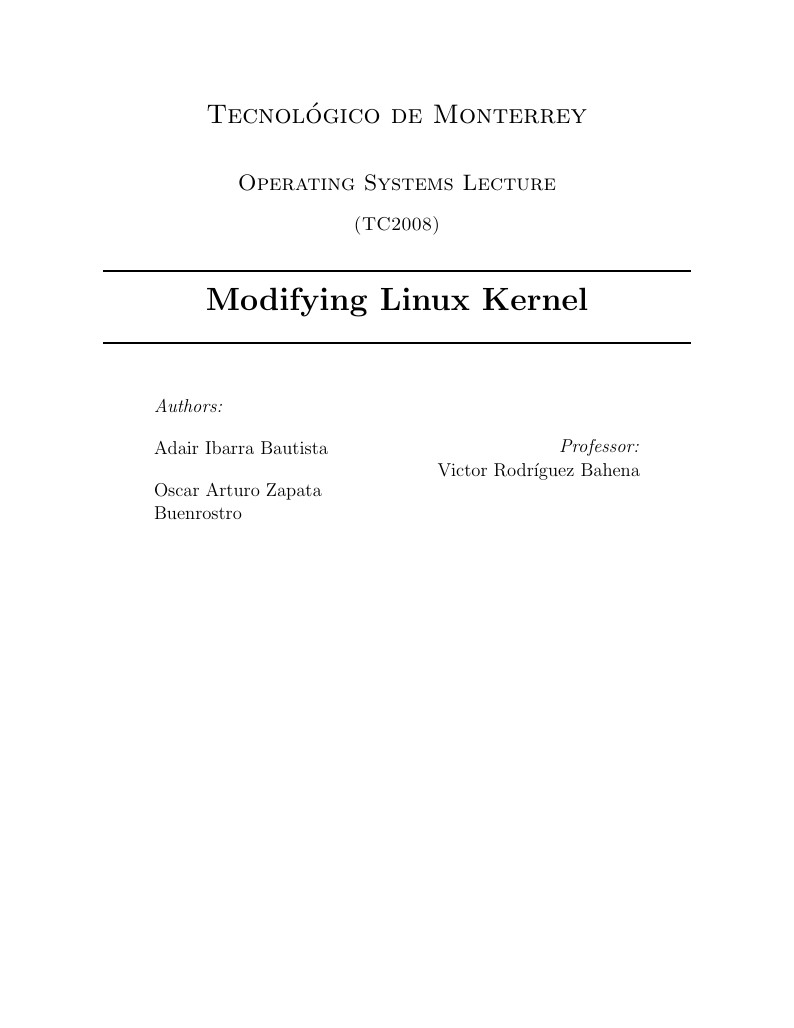

In this document we focus on modifying the Linux kernel through memory and scheduler parameters. The main objective is to study the performance of a computer while it runs the AIO-Stress benchmark. The test had to be run several times, since three of the parameters discussed in this project were each modified five times. After the tests were completed, the results were plotted on graphs, which show that all the variables have a noticeable influence on the performance of the computer.

#maths #russian #homework

Electricity theft is an economic problem for an electricity company because of the unbilled revenue from consumers who commit it. In a regulated market, the company must comply with the rules of a regulatory agency (ANEEL in Brazil), and the loss of revenue can compromise both compliance with regulatory targets and business efficiency. The objective of this article is to analyze how energy theft affects the economy of the regulated company, consumers, and society as a whole. Using the economic model Tarot (Optimized Tariff), the regulated electricity market was analyzed concisely and comprehensively through simulations, in order to find the points at which the company operates optimally and, from them, to determine the economic indicators.

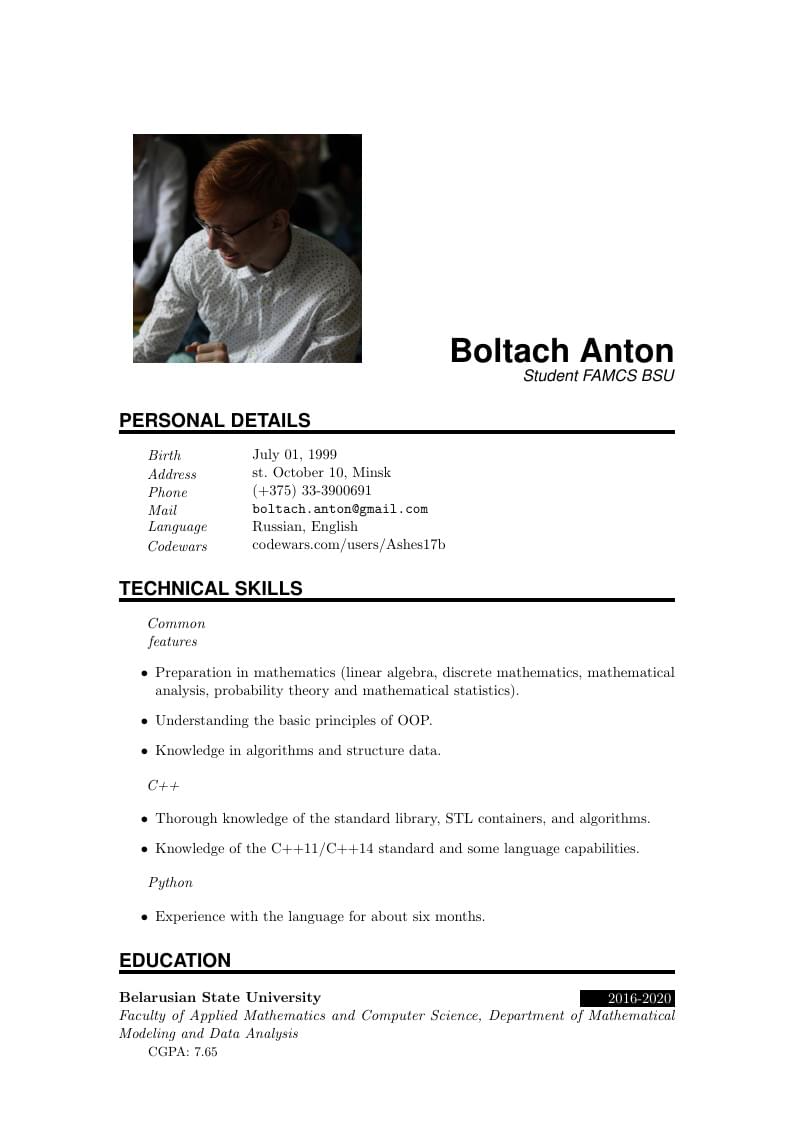

Boltach Anton's Résumé (created with a CV template)

Mohamed Javid's Résumé

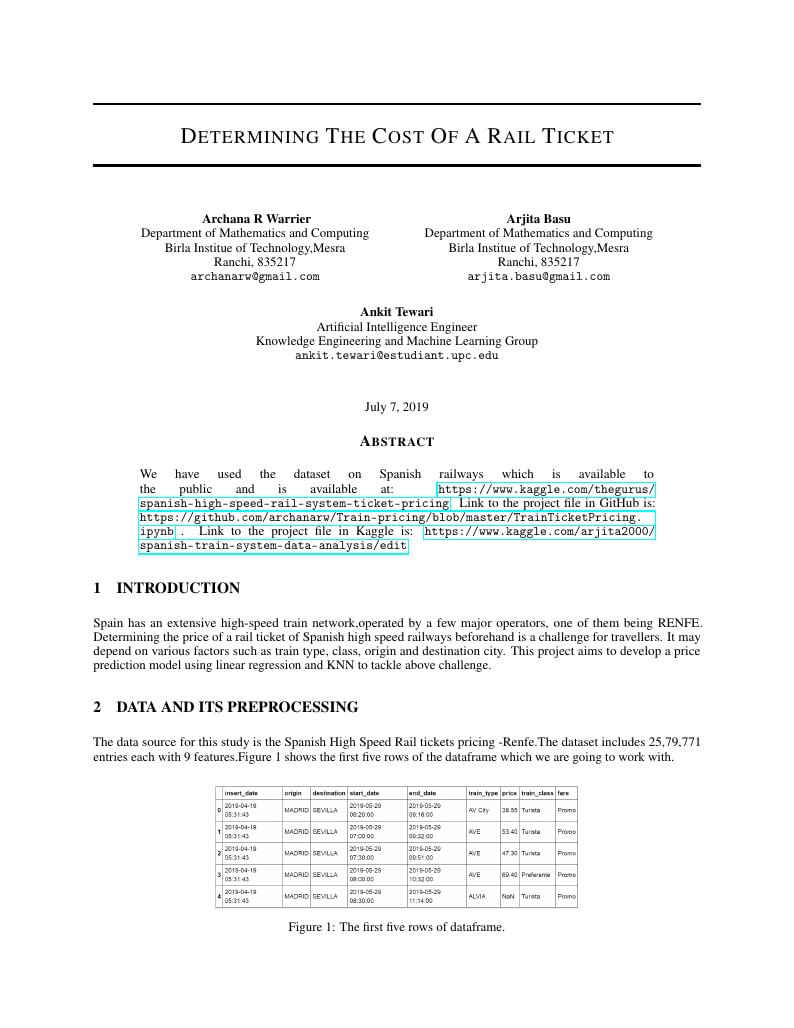

Determining the price of a rail ticket from data of Spanish railways
